Image via olia danilevich.

Idea

Imagine a multiplayer online game, such as a MOBA, where players choose from a roster of characters or a real-time strategy game with various unit types players can recruit from.

It is exceptionally challenging and tedious to balance the classes or units, so no single strategy becomes overwhelmingly dominant or completely useless. However, the objective of having properly balanced parameters in a game is crucial to make it engaging, with diverse gameplay and -- ultimately -- successful. Not to mention that properly balanced online multiplayer games have the potential to be played in competitive or even e-sport settings.

Imagine a battle encounter in an RPG game, in which you want experienced players to win by a narrow margin. This can be a design goal to create suspense as the outcome remains uncertain until the very end. As the previous one, this task also requires finding the correct set of parameters from a vast, multi-dimensional space of possible values.

In both examples, properly used evolutionary algorithms can support you. More examples of use cases will be provided in the “Designing a Good Fitness Function“ section of this article.

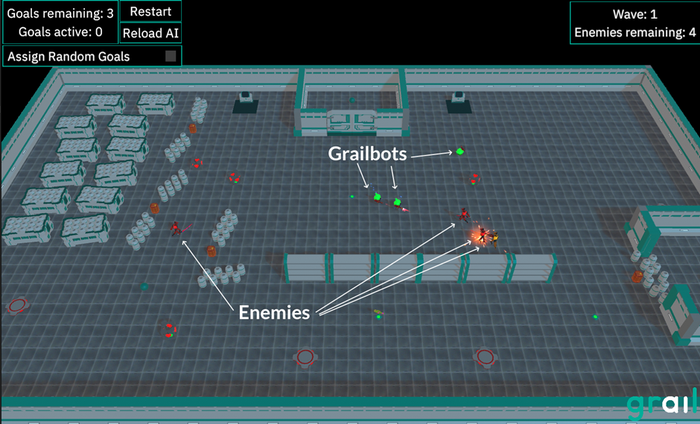

We have conducted a very simple proof of concept. Earlier, before even applying evolutionary algorithms, we had developed a simple demo called Grailbots, which demonstrates one of the techniques offered by Grail. In short, Grail (https://grail.com.pl/) is a middleware designed to implement AI in games using C++ or C#. It is suitable for various engines including custom ones, Unity, or Unreal.

Grailbots showcases the Utility AI technique implemented in Grail. This demo features two teams controlled by AI:

Grailbots - a team of four “smarter” soldiers whose behavior is implemented using Utility AI.

They resemble agents that could also be controlled by humans in a real-game.

Enemies - these appear in three waves and represent simple mobs with straightforward assault behavior, taking the shortest path to the Grailbots. They resemble hordes of zombies or other attacking crowds (although less numerous) as in tower defense and bullet hell games.

Screenshot from the Grailbots demo (simple sample project). In this situation, one Grailbot has already been killed by the Enemies.

Screenshot from the Grailbots demo (simple sample project). In this situation, one Grailbot has already been killed by the Enemies.

For this proof-of-concept, our aim was to select the set of parameters of Grailbots and Enemies, such that the Grailbots would typically win by the smallest possible margin. Ideally, the best outcome would be having just one Grailbot surviving with 1 HP after the three enemy waves.

This objective may sound artificial, but our idea was to test whether a design goal of this complexity could be feasibly achieved automatically using evolutionary algorithms.

In the following sections, we will continue to explore this use case but first let us provide a short background on this area. The Grailbots example will be continued in the final section.

Brief Introduction to Evolutionary Algorithms

Evolutionary algorithms (EA) draw inspirations from nature. In particular, the fundamental concepts that underpin their idea are natural selection, adaptation to the environment and survival of the fittest. These concepts were introduced in 1859 by Charles Darwin in a book titled “On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life”.

The classic way of solving problems on computers involves writing sequences of specific instructions. This significantly differs from evolutionary algorithms, which are an example of an Artificial Intelligence (AI) technique, albeit not as popular in recent years as Machine Learning. In EAs, as well as in broader AI, programmers only specify some measure of the quality for the end result without explicitly programming the way to achieve it. The solution is found automatically using a general, problem-agnostic approach.

They also belong to subclasses of AI called:

Computational Intelligence

Metaheuristics

Evolutionary Computation

In EAs, a population of potential solutions to a problem is maintained. The initial population contains weak solutions, often generated randomly or using a simple heuristic. These potential solutions are then subjected to a process mimicking natural evolution. They can reproduce and mutate. The new offspring replace some or all of the previous generation, forming a new population. There is also a concept of fitness, which plays a key role in selection of the individuals for the new population. Some of the basic notions you should know are presented in the next section.

The evolutionary computation encompasses several techniques inspired by natural evolution. For example:

Evolutionary algorithms: the topic of this article

Genetic algorithms (GA): similar to EAs, but they assume a binary-string encoding

Evolutionary strategies: iterative search method, which is often based on evolving a single solution

Genetic programming: evolving computer programs; they use dedicated representations

Memetic algorithms: also referred to as cultural algorithms, they combine GAs or EAs with local search

Differential evolution: a R^n -> R function optimization technique, which uses specific methods of combining existing solutions.

Basic Terminology

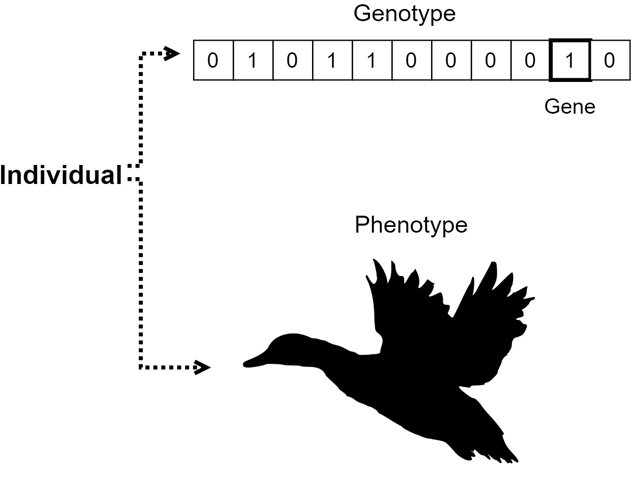

Individual - represents a single complete candidate solution to a problem that is solved using evolutionary computation. Individuals form a population and undergo evolutionary operations.

Genotype (chromosome) - represents the genetic encoding of the parameters of the individual. This is the representation used for evolutionary operations. For example, in genetic algorithms, a binary string is a commonly used encoding. One of the benefits of such an encoding is the ability to use the standard, well-established genetic operators with it.

Gene - a fundamental unit and building block of a genotype. Genes are typically located at fixed positions within a chromosome like cells in a vector.

Phenotype - this is the decoded representation, which is the actual form in which the solution is used in the real world. This representation is also referred to as “expressed” or “observable”. It contains features that are observable consequences of a particular genotype. Using the analogy to nature, while the genotype can be likened to a DNA sequence, the phenotype is the actual living organism that possesses this DNA sequence.

Population - a collection of individuals that together undergoes the evolutionary process. Individuals within the population compete with each other and may recombine. In other words, a population represents all potential solutions maintained at a given moment in the algorithm.

Fitness - assigned to individuals, is the numerical assessment of the quality of the candidate solution represented by the individual. Fitness is calculated by the fitness function and is typically defined as a floating-point number.

Crossover (recombination) - an operation that combines genetic information from two (or more in rare cases) parent individuals to create one or more offspring, analogous to biological reproduction. Many common crossover operations have been proposed. Most of them combine parts of the parents' encodings in some way with stochastic elements such as choosing the crossover point at random. Typically, fitter individuals (with higher fitness) have a higher chance of being selected for reproduction. Thus, crossover is often associated with exploitation, as it aims to exploit parts of the most promising candidate solutions.

Mutation - another genetic operation that introduces small random changes to individuals. Unlike crossover, mutation requires only one parent, subsequently mutates. Also, unlike crossover, mutation is typically applied with a very small probability (e.g., 0.05 - 0.1) to each individual. Mutation is often associated with exploration as random alterations to the encoding enable exploration of unknown regions in the solution space.

Children (offspring) - new individuals created through the application of genetic operations (crossover and mutation) to parent individuals.

Selection - the procedure of choosing individuals from the current population, including the results of mutation and recombination, for the next population. The next population will serve as parents in the next iteration. There are many selection methods, but most of them select individuals with higher fitness with higher probability.

Elitism - a mechanism that allows the best-evaluated