Introduction

This was originally posted on my blog at http://www.crazygaze.com/blog/2016/03/17/how-strands-work-and-why-you-should-use-them

If you have ever used Boost Asio, you have certainly used or at least looked at strands.

The main benefit of using strands is to simplify your code, since handlers that go through a strand don’t need explicit synchronization. A strand guarantees that no two handlers execute concurrently.

If you use just one IO thread (or in Boost terms, just one thread calling io_service::run), then you don’t need synchronization anyway. That is already an implicit strand.

But the moment you want to ramp up and have more IO threads, you need to either deal with explicit synchronization for your handlers, or use strands.

Explicitly synchronizing your handlers is certainly possible, but it adds unnecessary complexity to your code, which tends to lead to bugs. Another effect of explicit handler synchronization is that, unless you think really hard, you’ll very likely introduce unnecessary blocking.
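To make the explicit approach concrete, here is a minimal sketch using only the standard library. The `Conn` struct, `handle` and `run_explicit_sync_demo` are hypothetical names made up for illustration, not anything from Boost Asio. Every handler takes a per-connection lock, so a worker thread that picks up work for a busy `Conn` has to wait.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical connection object, for illustration only.
struct Conn {
    std::mutex mtx;     // guards `processed`
    int processed = 0;  // state touched by every handler
};

// A handler that synchronizes explicitly. If another worker thread is
// already inside a handler for the same Conn, this call blocks on the lock.
void handle(Conn& conn) {
    std::lock_guard<std::mutex> lock(conn.mtx);
    ++conn.processed;
}

// Runs `handlersPerThread` handlers for a single Conn on each of
// `numThreads` threads and returns the total number of handlers processed.
int run_explicit_sync_demo(int numThreads, int handlersPerThread) {
    assert(numThreads > 0 && handlersPerThread >= 0);
    Conn conn;
    std::vector<std::thread> workers;
    for (int t = 0; t < numThreads; ++t) {
        workers.emplace_back([&conn, handlersPerThread] {
            for (int i = 0; i < handlersPerThread; ++i) {
                handle(conn);
            }
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return conn.processed;
}
```

The result is correct, but every thread that loses the race for `conn.mtx` sits idle instead of picking up work for some other connection.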

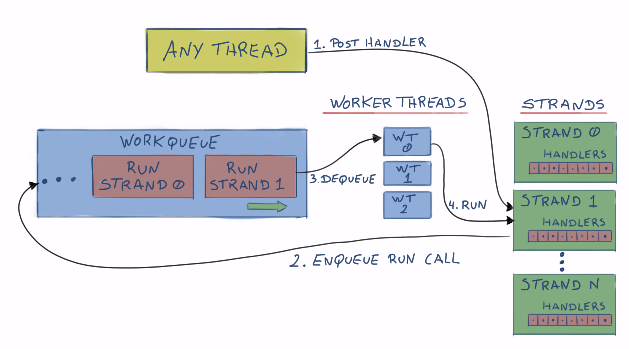

Strands work by introducing another layer between your application code and the handler execution. Instead of having the worker threads directly execute your handlers, those handlers are queued in a strand. The strand then controls when the handlers execute, so that all guarantees can be met.

One way to think about it is like this:

Possible scenario

To visually demonstrate what happens with the IO threads and handlers, I’ve used Remotery.

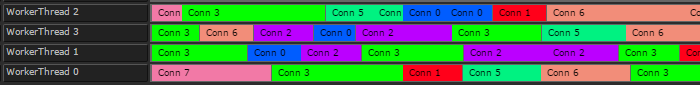

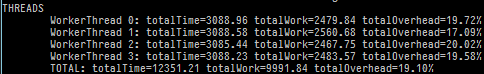

The code used emulates 4 worker threads, and 8 connections. Handlers (aka work items) with a random workload of [5ms,15ms] for a random connection are placed in the worker queue. In practice, you would not have this threads/connections ratio or handler workload, but it makes it easier to demonstrate the problem. Also, I’m not using Boost Asio at all. It’s a custom strand implementation to explore the topic.

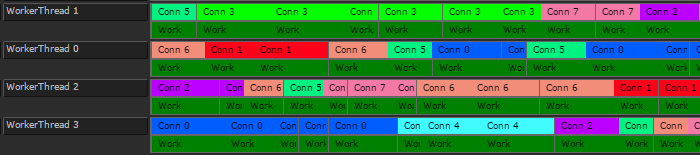

So, a view into the worker threads:

Conn N are our connection objects (or any object doing work in our worker threads, for that matter). Each has a distinct colour. All good on the surface, as you can see. Now let’s look at what each Conn object is actually doing with its time slice.

The worker queue and worker threads are oblivious to what their handlers do (as expected), so the worker threads will happily dequeue work as it comes. When one thread tries to execute work for a Conn object that is already in use on another worker thread, it has to block.

In this scenario, ~19% of total time is wasted on blocking or other overhead. In other words, only ~81% of the worker threads’ time is spent doing actual work:

NOTE: The overhead was measured by subtracting actual work time from the total worker thread’s time. So it accounts for explicit synchronization in the handlers and any work/synchronization done internally by the worker queue/threads.

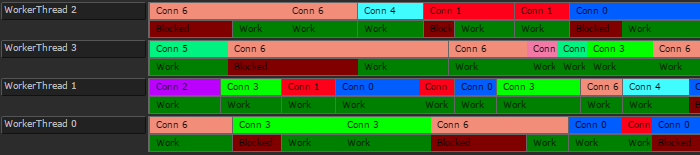

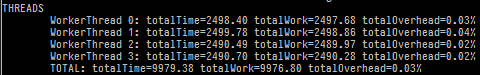

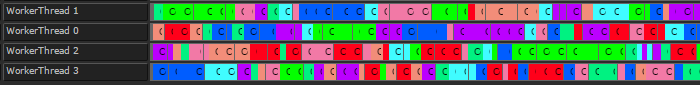

Let’s see what it looks like if we use strands to serialize work for our Conn objects:

Very little time is wasted with internal work or synchronization.

Cache locality

Another possible small benefit with this scenario is better CPU cache utilization. Worker threads will tend to execute a few handlers for a given Conn object before grabbing another Conn object.

Zoomed out, without strands

Zoomed out, with strands

I suspect in practice you will not end up with handlers biased like that, but since it’s a freebie that required no extra work, it’s welcome.

Strand implementation

As an exercise, I coded my own strand implementation. Probably not production ready, but I’d say it’s good enough to experiment with.

First, let’s think about what a strand should and should not guarantee, and what that means for the implementation:

No handlers can execute concurrently.

This requires us to detect if any (and which) worker thread is currently running the strand. If the strand is in this state, we say it is Running.

To avoid blocking, this means the strand needs to have a handler queue, so it can enqueue handlers for later execution if it is running in another thread.

Handlers are only executed from worker threads (in Boost Asio’s terms, a thread running io_service::run)

This also implies the use of the strand’s handler queue, since adding handlers to the strand from a thread which is not a worker thread will require the handler to be enqueued.

Handler execution order is not guaranteed

Since we want to be able to add handlers to the strand from several threads, we cannot guarantee the handler execution order.
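The guarantees above can be sketched in a few lines. This is a simplified illustration under my own assumptions, not the implementation discussed in this post and not Boost Asio’s: `SimpleStrand`, its `post` and `run` members and `run_strand_demo` are hypothetical names, and the demo treats the posting threads as the worker threads. A Running flag plus a handler queue is enough to ensure that no two handlers execute concurrently, without any thread blocking while a handler runs.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical, simplified strand: a handler queue plus a Running flag.
class SimpleStrand {
public:
    // Enqueues a handler and never executes it. Returns true if the strand
    // was idle, meaning the caller must arrange for run() to be called.
    bool post(std::function<void()> handler) {
        std::lock_guard<std::mutex> lock(m_mtx);
        m_queue.push_back(std::move(handler));
        if (m_running) {
            return false;  // whoever is in run() will pick this handler up
        }
        m_running = true;
        return true;
    }

    // Drains the queue. Only the thread that got `true` from post() may
    // call this, so at most one thread executes handlers at any time.
    void run() {
        for (;;) {
            std::function<void()> handler;
            {
                std::lock_guard<std::mutex> lock(m_mtx);
                if (m_queue.empty()) {
                    m_running = false;  // strand becomes idle again
                    return;
                }
                handler = std::move(m_queue.front());
                m_queue.pop_front();
            }
            handler();  // executed outside the lock
        }
    }

private:
    std::mutex m_mtx;  // protects the queue and the flag, not the handlers
    std::deque<std::function<void()>> m_queue;
    bool m_running = false;
};

// Several threads post handlers that increment a counter with no mutex of
// its own. The strand serializes the handlers, so no increment is lost.
int run_strand_demo(int numThreads, int handlersPerThread) {
    assert(numThreads > 0 && handlersPerThread >= 0);
    SimpleStrand strand;
    int counter = 0;  // deliberately not protected by its own lock
    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back([&strand, &counter, handlersPerThread] {
            for (int i = 0; i < handlersPerThread; ++i) {
                if (strand.post([&counter] { ++counter; })) {
                    strand.run();  // this thread acts as the worker
                }
            }
        });
    }
    for (auto& th : threads) {
        th.join();
    }
    return counter;
}
```

Note that the mutex is only held while touching the queue, never while a handler runs. A thread that finds the strand busy just enqueues its handler and moves on, which is where the savings over explicit locking in the handlers come from.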

The bulk of the implementation is centered around 3 methods we need for the strand interface (similar to Boost Asio’s strands):

post

Adds a handler to be executed at a later time. It never executes the handler as a result of the call.

dispatch

Executes the handler right away if all the guarantees are met, or calls post if not.

run