On May 12th 2016, Epic Games hosted the inaugural Game UX Summit in Durham (NC) – which I had the immense pleasure and honor to curate – to discuss the current state of User Experience in the video game industry. This event brought together fifteen renowned speakers from various UX-related disciplines: Human Factors, Human-Computer Interaction, UX Design, User Research, Behavioral Economics, Accessibility, and Data Science. The keynote speakers were the esteemed Dan Ariely and Don Norman, who popularized the term UX in the 90s.

Below is my summary of all the sessions (with editing help from Epic Games’ UX team members – special thanks to Ben Lewis-Evans and Jim Brown!). You can also watch most of the sessions here. The Game UX Summit will come back in 2017: Epic Games is partnering with Ubisoft Toronto, which will be hosting next year’s edition on October 4-5, 2017. If you wish to receive updates about the event or if you would like to submit a talk, you can sign up here and be on the lookout for #GameUXsummit on Twitter.

Each session, in order of appearance (click to jump to a specific session directly):

1- Anne McLaughlin (Associate Professor in Psychology, NC State University)

2- Anders Johansson (Lead UI Designer, Ubisoft Massive)

3- Andrew Przybylski (Experimental Psychologist, Oxford Internet Institute)

4- Ian Livingston (Senior User Experience Researcher, EA Canada)

5- Education Panel (Jordan Shapiro, Fran Blumberg, Matthew Peterson, Asi Burak)

6- Chris Grant (User Experience Director, King)

7- Ian Hamilton (UX Designer and Accessibility Specialist)

8- Steve Mack (Researcher and Analyst, Riot Games)

9- David Lightbown (User Experience Director, Ubisoft Montreal)

10- Jennifer Ash (UI Designer, Bungie)

11- Dan Ariely (Professor of Psychology and Behavioral Economics, Duke University)

12- Don Norman (Director of the Design Lab, UC San Diego)

1- Anne McLaughlin, Associate Professor of Psychology at NC State University

“Beyond Surveys & Observation: Human Factors Psychology Tools for Game Studies”

Watch the video

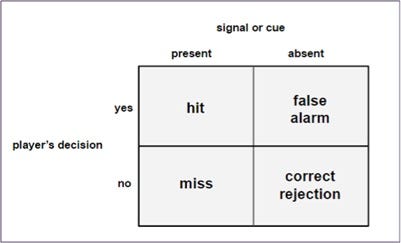

Anne McLaughlin kicked off the summit by talking about how Human Factors methods can be applied to measure and manipulate game experiences, without the cognitive biases that inevitably occur when we rely only on intuition or gut feeling. Signal detection theory, a classic of Human Factors, is one such method. It can be applied to any game element you want – or don’t want – your players to attend to, such as elements of the HUD, the interface, or icons. Whenever players have to make a decision under uncertainty, signal detection theory can help designers accomplish their goals. Signal detection theory is summarised in the figure below.

The Spy character from Team Fortress 2 offers an example where signal detection was made deliberately difficult (low salience cues) so players cannot easily spot a spy who has infiltrated their team. Anne went on to describe how to use Signal Detection Theory to test the impact of design decisions and shape player behavior. It is generally easy to detect hits and misses when a signal is present. For example, if a Spy has indeed infiltrated the team (signal is present), developers can easily analyze if the player’s decision was correct (hit – identify the spy and shoot) or wrong (miss – not recognizing a Spy is present).

However, false alarms are harder to detect and design around (e.g. when players believe there is a Spy but there is none) and so are correct rejections, even though these measures are just as important to the game experience as the hits or misses. For example, when developers want to make sure that players do not miss a signal, the tradeoff will be an increase of the false alarm rate, which can lead to player frustration. On the other hand, developers can use false alarms to increase tension, such as in a horror game (e.g. Until Dawn). Therefore, developers should consider and measure false alarm rates to inform design decisions.

In video games, the overall design can drive players to make important decisions. For example, games that offer health restoration and no friendly fire will encourage a liberal response strategy, where almost anything is worth shooting at, thereby increasing the false alarm rate. In games like Counter-Strike, on the other hand, the design encourages a more conservative response strategy because of the peer pressure of not harming your teammates and the lack of health restoration.
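To make the four outcomes and the liberal versus conservative strategies more concrete, here is a minimal sketch of how playtest counts could be turned into the standard signal detection measures: sensitivity (d') and response criterion (c). This is not something shown in the talk. The function name, the example counts, and the choice of a log-linear correction (adding 0.5 to each cell so that rates never hit 0 or 1) are my own assumptions for illustration.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (hit_rate, false_alarm_rate, d_prime, criterion) from raw counts.

    Hypothetical helper: the counts would come from logged player decisions,
    e.g. "shot at a real Spy" (hit) or "shot at a teammate" (false alarm).
    """
    # Log-linear correction (an assumption): keeps both rates strictly
    # between 0 and 1 so the z-transform below is always defined.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF

    # Sensitivity: how well players separate signal from noise.
    d_prime = z(hit_rate) - z(fa_rate)
    # Criterion: negative means liberal ("shoot at anything"),
    # positive means conservative ("only shoot when sure").
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return hit_rate, fa_rate, d_prime, criterion


# Made-up counts for two hypothetical playtests.
liberal = sdt_measures(hits=48, misses=2, false_alarms=30, correct_rejections=20)
conservative = sdt_measures(hits=20, misses=30, false_alarms=2, correct_rejections=48)
print("liberal criterion:", round(liberal[3], 2))
print("conservative criterion:", round(conservative[3], 2))
```

With these made-up numbers, the first playtest gives a negative criterion (a liberal strategy with many false alarms) and the second a positive one (a conservative strategy with many misses), while d' stays the same. In other words, the cues are equally detectable in both cases and only the payoffs differ.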

Anytime a person has to make a decision under uncertainty – find a signal in the noise – Signal Detection Theory can be used. So, consciously or not, game designers are manipulating the four quadrants (hit, miss, false alarm, correct rejection) by associating benefits or penalties with each of them. This also has implications for art direction because, as explained by Tom Mathews in his 2016 GDC talk, the color of the environment can affect how easy it is to detect enemies depending on their team color. Therefore, the blue team or the red team can be disadvantaged depending on how well its players stand out against the environment.

Anne concluded her talk by pointing out that she is not suggesting that games should be made easier. Rather, the whole point of thinking in terms of Signal Detection Theory is to ensure that gameplay matches the designers’ intent. How easy or how hard the game is remains up to them: they control the signal and the payoffs.

2- Anders Johansson, Lead UI Designer at Massive Entertainment

“My Journey of Creating the Navigation Tools for The Division: Challenges and Solutions”

Watch the video

Anders Johansson shared some UI design thinking from The Division, a third-person co-op experience in an open world with a heavy focus on RPG elements, or – as Anders summed it up – a game where players run around a lot trying to find things. As such, Anders’ talk largely focused on the design iterations that were carried out on the navigational user interface elements in the game.

In early development, the team tried three different map prototypes: one with a top-down camera, one with a free camera, and one with a player-centric camera that used the character as the reference point. Initially they chose to go with the player-centric design because it gave players a clear reference point for where they were in relation to the world around them through a direct camera-to-world mapping.

Johansson said that the UI team thought they had nailed the map prototype at that point, but it turned out to have critical UX issues. One of the reasons for this was that the game that shipped used a completely different camera system than the version that the map research was based on, and these camera differences left many of the map team’s decisions irrelevant or inadequate.

In order to address this, the UI team had to rework the map functionality, and learn to adjust it as the game progressed. As an example of how dramatic the changes were, the camera used to orient itself around the player, but in the shipping build, the map always orients North to avoid any directional confusion. The new map also has contextual elements (such as buildings) to help the player orient themselves, and the camera can even detach from the character to allow a full-screen exploration of the map. The team decided to remove functionality that allowed the player to run around with the map open because with the new camera system, players could not see their feet anymore. Anders’s core message here was that as a game evolves, the UI has to evolve with it based on the changing results of UX data.

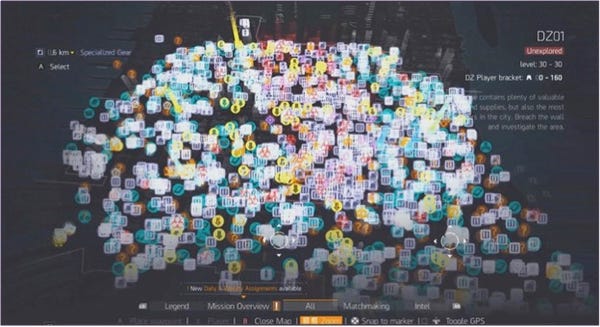

Another challenge Anders and the UI team faced was how to present the overwhelming amount of information that was available on the map. There is a lot to do in the game, and without any filters the map would look like this:

To get around this, like many other games, The Division uses filters on the map. At first, the UI team allowed players to filter each individual map element, but playtest data and eye-tracking heatmaps revealed that most players were unaware of this feature, and the players who were aware of it reported feeling overwhelmed by the number of options they had. The UI team looked for solutions in other games, but didn’t find any that suited their case until they looked at solutions outside of games, specifically Google Maps, which automatically filters content based on the level of zoom. This revelation led to the idea of The Division’s adaptable filter system.
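The idea of filtering by zoom level can be sketched in a few lines. This is purely illustrative and not The Division’s actual implementation: the `MapIcon` type, the `min_zoom` thresholds, and the sample icons are all hypothetical, and I am assuming that a larger zoom value means a closer view.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MapIcon:
    name: str
    category: str
    min_zoom: float  # hypothetical threshold: icon shows once zoom >= this value


def visible_icons(icons, zoom):
    """Return the icons that should be drawn at the given zoom level."""
    return [icon for icon in icons if zoom >= icon.min_zoom]


# Hypothetical content: important items appear first, details only up close.
SAMPLE_ICONS = [
    MapIcon("Main mission", "mission", min_zoom=0.0),
    MapIcon("Side mission", "mission", min_zoom=1.0),
    MapIcon("Collectible", "collectible", min_zoom=2.0),
]

for zoom in (0.5, 1.5, 2.5):
    names = [icon.name for icon in visible_icons(SAMPLE_ICONS, zoom)]
    print(zoom, names)
```

The point of this design is that players never have to find or manage a filter menu: the amount of detail follows an action they are already performing, which is zooming in and out.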

After the core of the map was done and the main features were in, Anders noted that there were still some challenges left to solve, the most important being: what happens when players close the map? How can the game help players find their bearings without requiring them to continually open and close the map? To address this, the UI team tried to give players locational information, such as street names at intersections. Johansson noted that while players were able to read the signs, they perceived this method as a slow and cumbersome way to navigate. So the UI team tried adding key landmarks as points of interest (such as the Empire State Building), but this didn’t really work either because spatial awareness works differently in games (where players don’t look around that much and focus mainly on the reticule) than it does in real life. Next, the team gave the player an industry-standard 2D marker to follow on screen, but eye-tracking studies revealed that players did not really see the marker, or lost it quickly when they did see it, because it was not in a central position on the screen. Ultimately, the team decided to try a combination of ideas: they used an on-screen GPS marker that mirrored the marker on the mini-map, was easier to see, and included street names.

Anders said that they also added a “breadcrumb system” for indoor and in-mission navigation, which consisted of a 2D pulsing icon (to draw players’ attention) that moved toward the objective and left a trail behind it as it moved.

To conclude, Anders and the UI team tested many UI prototypes for navigation and only kept the most elegant and functional solutions, proving that nothing is easy, and that everything can always be improved.

3- Andrew Przybylski, Experimental Psychologist, Oxford Internet Institute

“How we’ll Know when Science is Ready to Inform Game Development and Policy”

Watch the video

Andrew Przybylski presented a metascience perspective in his talk. He questioned how scientific research is carried out and the quality of scientific rigour, both in academia and the video game industry. Using scientific methodology implies that through a systematic view, observation and testing are rigorously conducted to understand the reality we live in and get closer to the truth. Having a hypothetico-deductive scientific process implies starting by generating hypotheses and research questions, designing a study to test the hypotheses, collecting data, analysing and interpreting the data against the hypotheses, and then publishing and sharing the results. This is the ideal of science and how it should work. Przybylski noted that in reality, however, published scientists are suspiciously successful at confirming their own hypotheses. Andrew pointed out that if you look at all these positive findings more objectively, you’ll find that in psychology, neuroscience, political science, cancer research, and many other research fields, studies suffer from a serious lack of replicability.

So why do we have this replicability crisis? According to Andrew, when looking at published work, many studies don’t have enough statistical power to accur